Some Bets on LLMs

What is the purpose of Language Models

What is the purpose of Language Models

An old legacy

Even before GTP emerged, I had a discussion with Laurent El Ghaoui on their capabilities. It was the beginning of Word2Vect and Mikolov’s paper Efficient estimation of word repre- sentations in vector space, echoing old properties of Self Organising Maps when they where used on texts.

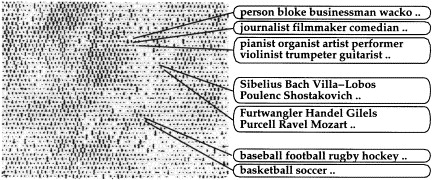

Not a lot of researchers remember the proximity maps that one could for instance found in Deboeck, Guido, and Teuvo Kohonen, eds. Visual explorations in finance: with self-organizing maps. Springer, 1998. At the time we were talking of prototypes and no embeddings, but they were exactly the same.

Have a look at this old image from the WEBSOM series of papers: Kaski, Samuel, Timo Honkela, Krista Lagus, and Teuvo Kohonen. “WEBSOM–self-organizing maps of document collections” Neurocomputing 21, no. 1-3 (1998): 101-117. At the time I was a research engineer, in charge of Embedded AI, at the Renault Research Center in parallel with my PhD thesis and implemented them to cluster patents.

With Laurent, we were discussing what would be the ultimate goal of Language Models, and we conclude that if it would be possible to mask randomly a lot of words to replace them by their grammatical, or may be semantic, function (like noun or verb or noun:place, etc), we could obtain statistically a machine understanding the structure of the language, with the limitations of the frequentist approach I later documented in the paper “Mathematics of Embeddings: Spillover of Polarities over Financial Texts” (published in 2024 but with a first version available in 2021 under the name Do Word Embeddings Really Understand Loughran-McDonald’s Polarities?).

The revolution of Large Language Models (LLMs)

When LLMs emerged and got popular with GPT3, some researchers immediately announced the rise of Artificial General Intelligence, claiming that these machines will be able to know things. It was the start of a debate, that can be summarised as Sam Altman vs. Yann Le Cun: either adding always more data is the way these machine will reach AGI, either that without a World Model it will never be possible.

From the beginning (no obligation to believe this), I am on the side of the need of structure. Initially because of an analogy of what I witnessed during my PhD time: it was more or less when Neural Nets succeeded to detect textures in image processing. Until then, a plank in wooden texture has been perceived as a lot of small shapes, and researchers where using a lot of contrast indicators with a complex combination of thresholds, difficult to fine tune. And these Neural Net could do the job.

The power and the trap of anthropomorphism

This was for me the same property: being able to split the style from the content, that GPT (Generative Pre-trained Transformers) were doing, and nothing more.

The fact that their interface is natural for humans (text), is simultaneously very useful (I remember Rodney Brooks saying that the main difficulties for humans to use learning machines will be the complexity of their user guide), and a trap (because of the anthropomorphic implicit message that it conveys: ’‘since it speaks like humans, it is human’’).

But I somehow had to comply and for instance call LLMs ‘’A.I.’’ even if I largely prefer the term coined by Alain Trouvé of ’‘Cheap Intelligence’’.

What did you expect?

It is now clear that LLM’s knowledge is corrupted, either because data are too noisy in a very generic sense (a lot of non interesting texts and a lot of texts that are not curated), either because without a world model, that has to be built by humans, the correct way to combine texts is impossible to fine-tune (as of today, builders of LLMs are generating tons of texts from templates to correct from biases in inaccuracies).

The wave of RAG (Retrieval-augmented generation), that is, according to me, not good enough and will ultimately be replaced by something else is clear: we want to replace the noisy apparence of knowledge stored in LLMs parameters by sources of truth. For the ones not familiar with RAG (implemented in Google’s NotebookLM solution is you want to try it), it is an attempt to attract the probability distributions used to generate answers (or continuations of sentences) towards a set of texts you trust.

Agentic AI goes in the same direction: using the capability of LLM to speak English (or any other language) to map the requests of the user to deterministic components and for the output use a LLM again to wrap English sentences around the result.

We do not trust the apparence of knowledge stored in LLMs any more

All these efforts are going in the same direction:

- inhibit or erase the apparence of knowledge LLMs have stored

- keep only their capability to ‘speak human languages’

- force them to use texts we trust.

This last point ‘texts we can trust’ means that we now understand we will never get veracity, we need integrity of the answers (thank you Christine Balague for the point).

I am not saying that fully separating the style (the grammatical and semantic properties of sequences of words) from its content (the expression of knowledge) is easy and I am not saying it is not an interesting philosophical question (read Wittegenstein’s books if you have a doubt). I am just saying that knowledge cannot be captured doing statistics on texts.

Time for a prediction: my bets

Since I am very impressed by Rodney Brooks’ prediction scorecards, let me do one (indeed I do it in private for long):

and will get the knowledge they use from external, human chosen, sources.

Do it is on Brooks’ way:

- by the end of 2027, all solutions based on languages will use external deterministic sources, chosen by humans.

- before 2030, RAGs will be replace by another approach that will not only attract the attention of the algo to reference texts, but that will remove knowledge from its parameters.