EMINES Winter School on AI (UM6P Ben Guerir)

Next Generation AI and Economic Applications

EMINES Winter School (UM6P Ben Guerir)

I was invited to give a talk at the 2025 EMINES Winter School, held on the Ben Guerir campus of UM6P (Mohammed VI Polytechnic University), near Marrakech.

The lineup of speakers was:

- Eric Moulines on How Generative AI Works: A Guided Tour of Its Key Algorithms

- Michael Jordan on An Alternative View on AI: Collaborative Learning, Incentives, and Social Welfare

- Yann LeCun on Recent Advancements in AI and Major Challenges

- Laurent Daudet on Agentic AI: Harnessing Generative AI for Complex Workflows

- myself on AI and Financial Applications

- Btissam El Khamlichi on Pioneering Next-Generation AI for Africa’s Future: Insights from AI Movement-UM6P

- Benoit Bergeret on Socio-Economic Impact of Next-Generation AI

These talks were complemented by two round tables:

- Bridging Theory and Applications in Next-Generation AI

- Enterprise: What’s the Real Value?

There were also lightning talks from the winners of a hackathon sponsored by SIANA and ONCF.

Yet another talk on AI for Finance?

I took the time to create a full set of new slides for this talk. Rather than presenting a recent paper, I shared the view I have developed through discussions since the launch of the Finance and Insurance Reloaded (FaIR) initiative at Institut Louis Bachelier in 2017.

The book Machine Learning and Data Sciences for Financial Markets: A Guide to Contemporary Practices, which I co-edited with Prof. Agostino Capponi, was another occasion to think about how our field is, and will continue to be, impacted by machine learning in general.

Let me recall the main elements I presented:

Start by going back to the functions of the financial system

For that, a good starting point is the paper “A functional perspective of financial intermediation” by Robert Merton (Financial Management, 1995: 23-41), which identifies six main functions of the financial system:

- A financial system provides a system for the exchange of goods and services.

- A financial system provides a mechanism for the pooling of funds to undertake large-scale indivisible enterprises.

- A financial system provides a way to transfer economic resources through time and across geographic regions and industries.

- A financial system provides a way to manage uncertainty and control risk.

- A financial system provides price information that helps coordinate decentralized decision-making in various sectors of the economy.

- A financial system provides a way to deal with asymmetric information and incentive problems when one party to a financial transaction has information that the other party does not.

Recall the main features of A.I.

On the other side, researchers moved from Statistical Learning (adjusting the parameters of a statistical procedure each time new data arrive; Vapnik and Chervonenkis, 1970) to Machine Learning (where the underlying model is unknown; Cybenko, 1989).

This went hand in hand with the continuous increase in our capacity to record, store, and process data.

An A.I. system is an engineered set of components, including learning capabilities, designed to produce a given feature. As such, it is a General Purpose Technology: it does not provide any solution by itself, and it needs investment (capital and human) to produce secondary innovations that answer a specific set of needs in a domain.

Stylised functions that A.I. can provide to the financial system:

- Reproduce animal perception. This has worked particularly well from the beginning, because live perception comes with an implicit notion of invariance and an explicit context. When the data are pre-recorded, the noise they contain can depend on the context, which is very bad: it introduces a bias that can prevent extrapolation.

- Perform statistics on very large databases: going beyond computing a mean or a standard deviation to provide meaningful statistics, i.e. separating information from noise. Recommendation engines are typical of this kind of feature.

- Approximately solve optimization problems over a domain. Methods that solve low-dimensional optimization problems “exactly” do not scale with the dimension; some approximation is needed. AlphaZero is a secondary innovation of this family.
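To make the scaling argument concrete, here is a small Python sketch. It reads “solving exactly” as exhaustive grid search, whose cost is \(k^d\) evaluations for \(k\) points per axis in dimension \(d\), and it uses plain gradient descent on a hypothetical quadratic objective as the approximate alternative, whose cost per step grows only linearly in \(d\). Neither method is what AlphaZero does (it combines tree search with neural networks); both are stand-ins chosen only to illustrate the trade-off.

```python
from typing import Callable, List


def grid_evaluations(points_per_axis: int, dim: int) -> int:
    """Number of objective evaluations needed by an exhaustive grid search."""
    return points_per_axis ** dim


def gradient_descent(grad: Callable[[List[float]], List[float]],
                     x0: List[float], lr: float = 0.1, steps: int = 100) -> List[float]:
    """Approximate minimiser: each step costs one gradient, i.e. O(dim) work here."""
    x = list(x0)
    for _ in range(steps):
        g = grad(x)
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x


# Hypothetical separable quadratic f(x) = sum_i (x_i - 0.5)^2, minimised at x_i = 0.5.
def quadratic_grad(x: List[float]) -> List[float]:
    return [2.0 * (xi - 0.5) for xi in x]


for dim in (1, 2, 10, 100):
    print(dim, grid_evaluations(10, dim))

x_star = gradient_descent(quadratic_grad, [0.0] * 100)
print(max(abs(xi - 0.5) for xi in x_star))
```

With 10 points per axis, grid search needs \(10^{100}\) evaluations in dimension 100, while the gradient iterations reach the minimiser to within \(10^{-6}\) in 100 steps. The price of the approximation is that gradient descent only offers such guarantees on well-behaved (here convex) problems.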

Putting It All Together

The financial system performs several functions, so it needs more than one secondary innovation.

If we split the needs into domains where actors are already specialized, we can consider three families:

- Custom interactions with users and custom products (for narrow banks and structurers): recommendation engines (robo-advisors and support for market makers), smooth price formation, and solving information asymmetry problems (game theory).

- Improving risk intermediation (investment banks, brokers): offline RL for trajectories of nonlinear portfolios (deep hedging), pricing interpolation (fast pricing), optimal trading, and trajectory-wise portfolio construction.

- Better connection with the real economy: use of alternative data (satellite images, geolocation, credit card transactions, texts, etc.) to make better-informed decisions.

Without such efforts, you get nothing more than cheap intelligence (cheap not in the sense of low cost, but of low quality).
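Among these, pricing interpolation (fast pricing) is the easiest to sketch: pay for the slow pricer once, on a grid, and answer later requests with a cheap approximation. In this toy Python example the Black-Scholes formula stands in for an expensive pricer, and piecewise-linear interpolation in the spot stands in for a learned approximator such as a neural network; the class name `FastPricer`, the contract parameters, and the grid are all hypothetical.

```python
import bisect
import math


def norm_cdf(z: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def bs_call(spot: float, strike: float = 100.0, vol: float = 0.2,
            rate: float = 0.0, maturity: float = 1.0) -> float:
    """Black-Scholes European call price, standing in for a slow pricer."""
    sd = vol * math.sqrt(maturity)
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * maturity) / sd
    d2 = d1 - sd
    return spot * norm_cdf(d1) - strike * math.exp(-rate * maturity) * norm_cdf(d2)


class FastPricer:
    """Price once on a grid of spots (offline), then answer queries by
    piecewise-linear interpolation (online)."""

    def __init__(self, pricer, lo: float, hi: float, n_nodes: int):
        step = (hi - lo) / (n_nodes - 1)
        self.nodes = [lo + i * step for i in range(n_nodes)]
        self.values = [pricer(s) for s in self.nodes]  # the expensive part, done once

    def __call__(self, spot: float) -> float:
        if not self.nodes[0] <= spot <= self.nodes[-1]:
            raise ValueError("spot outside the precomputed grid: no extrapolation")
        # index of the grid cell [nodes[i], nodes[i + 1]] containing spot
        i = min(bisect.bisect_right(self.nodes, spot) - 1, len(self.nodes) - 2)
        s0, s1 = self.nodes[i], self.nodes[i + 1]
        w = (spot - s0) / (s1 - s0)
        return (1.0 - w) * self.values[i] + w * self.values[i + 1]


fast = FastPricer(bs_call, lo=50.0, hi=150.0, n_nodes=201)
print(bs_call(101.3), fast(101.3))
```

Refusing to answer outside the grid is a deliberate design choice: like any fitted approximation, the interpolant is only trustworthy where it was built, which echoes the extrapolation issue raised for pre-recorded data.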

Main Take-Aways:

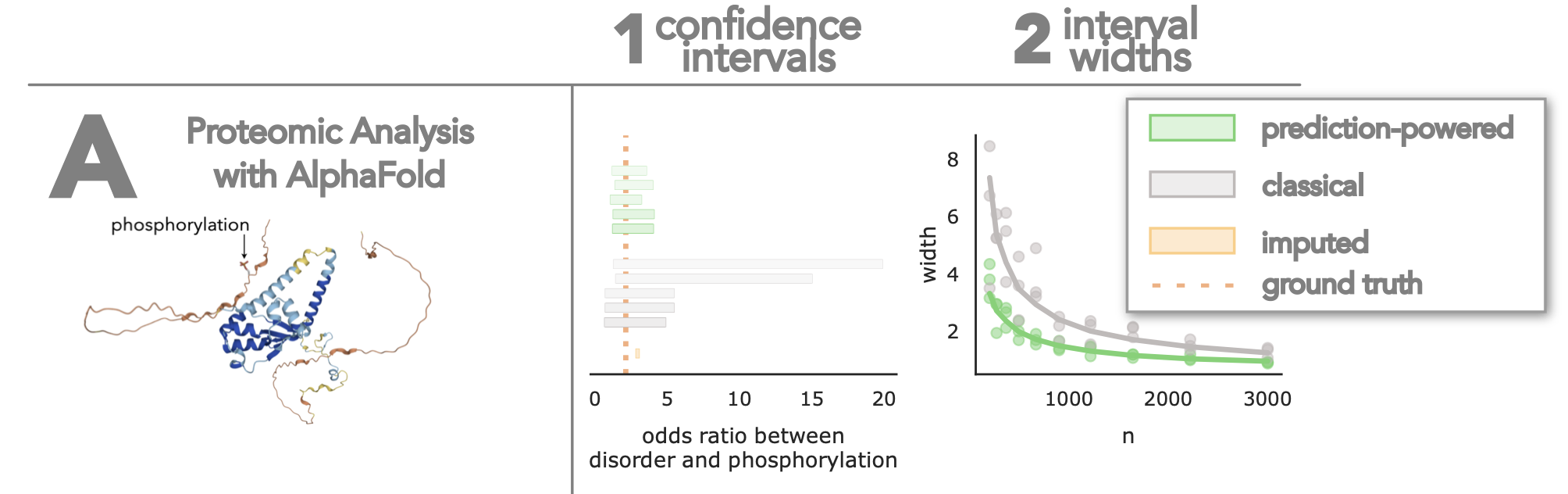

Synthetic data are useless without correction

Michael Jordan insisted on how results obtained on synthetic data have to be corrected before they can be used (see his paper: Angelopoulos, Anastasios N., Stephen Bates, Clara Fannjiang, Michael I. Jordan, and Tijana Zrnic. “Prediction-powered inference.” Science 382, no. 6671 (2023): 669-674). This is consistent with my paper with Rengim Cetingoz, “Synthetic Data for Portfolios: A Throw of the Dice Will Never Abolish Chance” (2025).

The formula from Michael’s paper giving an estimate \(\bar Y\) of the mean (the paper covers more general estimands) is

\[\bar Y = \frac{1}{N}\sum_{j=1}^{N} f(\tilde X_j) - \frac{1}{n} \sum_{i=1}^{n} \left( f(X_i) - Y_i\right),\]

where \(f(\tilde X_j)_{1\leq j\leq N}\) are the \(N\) synthetic data points generated from the iid noise \(\tilde X_j\), and \((X_i,Y_i)_{1\leq i\leq n}\) is a small labelled dataset (typically \(n \ll N\)).

Two implications of this formula (the two sums are computed on independent samples, hence are independent):

- it is impossible to “do better” (in terms of bias or variance) than the small-sample, out-of-sample estimate given by the right sum, \(\frac{1}{n} \sum_i \left( f(X_i) - Y_i\right)\);

- nevertheless, if your generative process \(f\) is good (meaning that \(f(x)-y\) is small), the large synthetic sample can help.
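A minimal Python simulation of the mean estimator above may help. It uses the setting of the prediction-powered inference paper, where \(f\) is a model applied to inputs; reading \(f\) as a generator of synthetic data, as in the text, does not change the arithmetic. Everything here is hypothetical: the Gaussian data (so that the true mean is 1), the systematic bias of 0.3 in \(f\), and the sample sizes \(n = 100\) and \(N = 2000\).

```python
import random
import statistics


def ppi_mean(f, x_unlabelled, x_labelled, y_labelled):
    """Prediction-powered estimate of E[Y]:
    (1/N) sum_j f(X~_j) - (1/n) sum_i (f(X_i) - Y_i)."""
    synthetic_term = statistics.fmean(f(x) for x in x_unlabelled)
    rectifier = statistics.fmean(f(x) - y for x, y in zip(x_labelled, y_labelled))
    return synthetic_term - rectifier


def simulate(n=100, big_n=2_000, bias=0.3, noise=0.5, trials=200, seed=0):
    """Repeat the experiment to compare three estimators of E[Y] = 1."""
    rng = random.Random(seed)

    def f(x):
        # hypothetical model: right slope, but shifted by `bias`
        return x + bias

    classical, naive, ppi = [], [], []
    for _ in range(trials):
        x_lab = [rng.gauss(1.0, 1.0) for _ in range(n)]
        y_lab = [x + rng.gauss(0.0, noise) for x in x_lab]  # so E[Y] = 1
        x_unl = [rng.gauss(1.0, 1.0) for _ in range(big_n)]
        classical.append(statistics.fmean(y_lab))            # small labelled sample only
        naive.append(statistics.fmean(f(x) for x in x_unl))  # synthetic data only
        ppi.append(ppi_mean(f, x_unl, x_lab, y_lab))         # synthetic data, corrected
    return classical, naive, ppi


classical, naive, ppi = simulate()
for name, est in (("classical", classical), ("naive", naive), ("ppi", ppi)):
    print(name, round(statistics.fmean(est), 3), round(statistics.variance(est), 5))
```

With these settings one should expect the synthetic-only mean to sit near 1.3, since the bias of \(f\) is never removed, while the corrected estimate is centred on 1 with a smaller spread than the classical mean of the 100 labels, because \(f(x)-y\) varies less than \(y\) itself. Since the two sums are independent, the variance of \(\bar Y\) is the sum of their variances, so it can never fall below the variance of the right sum.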

The need for a World Model

Yann LeCun, as usual, insisted on the need for a world model to do better than what Large Language Models (or the current generative use of GPT) can do.

What does it mean?

Instead of trying to map the input of a model directly to its output, the World Model viewpoint states that inputs should be represented in a feature space in which, once actions (or controls) are chosen, an output is produced.

More formally:

- the input \(X\) is embedded in a state space to get \(\tilde X\),

- the control \(u\), which lives in the natural space, also has an embedded representation \(\tilde u\),

- there is an interaction model \(F(\tilde x, \tilde u)\) that gives the value (here, a cost to be minimized) of taking the action \(u\) when the state is at \(\tilde x\).

Thanks to this, each time you need an output, you solve \(\arg\min_u F(\tilde x, \tilde u)\) to get the output \(u\).
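A toy Python sketch of this inference step follows. Everything in it is hypothetical: the two encoders, the quadratic interaction model \(F\), and the use of gradient descent (with finite differences) on a one-dimensional action to compute the argmin. The open questions listed next are precisely about how such components should be designed and trained.

```python
from typing import Callable, List

Vector = List[float]


def embed_state(x: float) -> Vector:
    # hypothetical state encoder x -> x~ (a trained network in practice)
    return [x, x * x]


def embed_action(u: float) -> Vector:
    # hypothetical action encoder u -> u~
    return [u, 2.0 * u]


def energy(x_t: Vector, u_t: Vector) -> float:
    # hypothetical interaction model F(x~, u~): lower is better
    return sum((a - b) ** 2 for a, b in zip(u_t, x_t))


def plan(x: float,
         encode_state: Callable[[float], Vector],
         encode_action: Callable[[float], Vector],
         F: Callable[[Vector, Vector], float],
         u0: float = 0.0, lr: float = 0.05, steps: int = 200, h: float = 1e-5) -> float:
    """Approximate argmin_u F(x~, u~) by gradient descent on the action u."""
    x_t = encode_state(x)  # embed the input once

    def cost(u: float) -> float:
        return F(x_t, encode_action(u))

    u = u0
    for _ in range(steps):
        grad = (cost(u + h) - cost(u - h)) / (2.0 * h)  # central finite difference
        u -= lr * grad
    return u


print(plan(1.0, embed_state, embed_action, energy))
```

For these encoders, the cost is \((u-x)^2 + (2u-x^2)^2\), whose minimiser is \(u^\star = (x+2x^2)/5\); for \(x=1\) the planner returns approximately 0.6.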

The open questions are:

- what is the best feature space to embed the state and the control?

- how to train the embeddings \(x\mapsto\tilde x\), \(u\mapsto\tilde u\) and \(F\)?

- how to obtain a meaningful decomposition of an action into several steps?

The Cambrian Explosion of A.I.

Benoit Bergeret gave a visionary talk on the future perspectives of AI.

He drew an amusing analogy with the Cambrian Explosion (about 541 million years ago), during which Earth saw

- a Rapid Diversification of species,

- the Development of Hard Parts,

- the emergence of Complex Ecosystems.

He compared it with the current state of AI/ML:

- the Rapid Diversification of species stands for the diversification of models: transformers, convolutional networks, XGBoost, dropout techniques, RAG, distillation, etc.,

- the Development of Hard Parts corresponds to structures that are difficult to move: TPUs, NVIDIA GPUs, cloud vs. edge, etc. I would add smart sensors (LIDAR, smart glasses, etc.) as well as exoskeletons,

- and the emergence of Complex Ecosystems corresponds to the intertwining of MLOps, data curation, annotation, agentic systems, etc.

Jevons’ Paradox for energy consumption

Regarding energy consumption, Benoit reminded us of Jevons’ paradox (1865): more efficient steam engines did not lead to lower coal consumption but to higher consumption, since the use cases exploded.
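One textbook way to see the paradox is the rebound effect under constant-elasticity demand for the service the fuel provides (work from a steam engine, say). The sketch below assumes that model; the function name, the demand scale, and the elasticities are hypothetical.

```python
def fuel_consumption(efficiency: float, elasticity: float,
                     fuel_price: float = 1.0, scale: float = 100.0) -> float:
    """Fuel burnt when demand for the service has constant price elasticity."""
    service_price = fuel_price / efficiency                  # cost of one unit of work
    service_demand = scale * service_price ** (-elasticity)  # hypothetical demand curve
    return service_demand / efficiency                       # fuel needed to deliver it


for eps in (0.5, 1.5):
    before = fuel_consumption(1.0, eps)
    after = fuel_consumption(2.0, eps)
    print(f"elasticity={eps}: fuel {before:.1f} -> {after:.1f}")
```

Fuel use equals \(\text{scale}\cdot p^{-\varepsilon}\eta^{\varepsilon-1}\) for fuel price \(p\), efficiency \(\eta\), and elasticity \(\varepsilon\), so it increases with efficiency exactly when \(\varepsilon > 1\): doubling \(\eta\) takes fuel use from 100 to about 141.4 with \(\varepsilon = 1.5\), but down to about 70.7 with \(\varepsilon = 0.5\).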

The organisers of the conference

Organizing such a conference in Africa, with a speaker on African women in tech and AI, made a lot of sense. Thank you to the organizers for making us all think about the perspectives from this continent:

- Mohamed El Rhabi

- Eric Moulines

- Nicolas Cheimanoff